Design Challenge - Hill

We started the project by studying a hill given to us by our stakeholders:

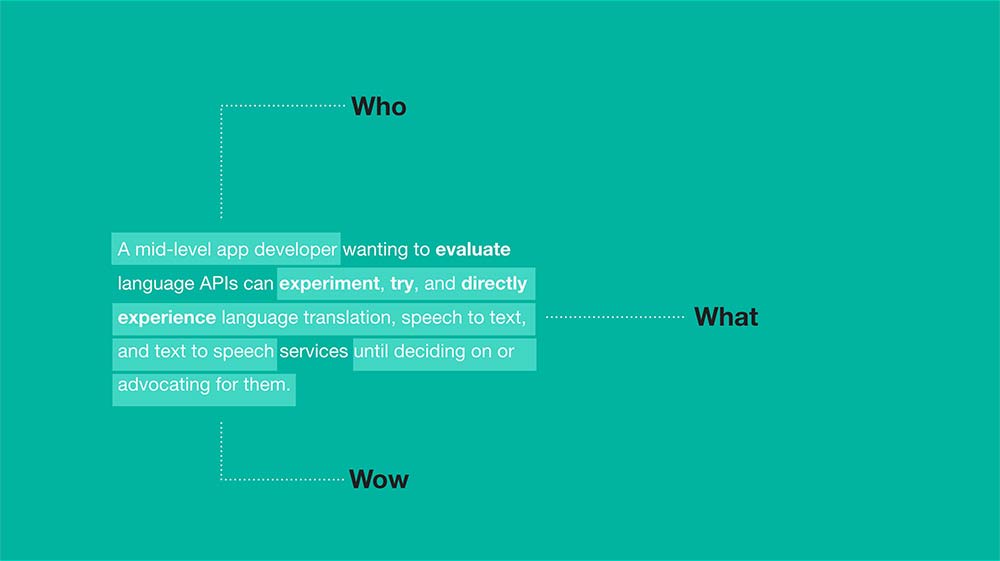

An Application Developer can deeply understand Watson Cognitive Services so that they can utilize them in their own applications (Try + Buy).

Hills are statements of intent written as meaningful user outcomes. They tell you where to go, not how to get there, empowering teams to explore breakthrough ideas without losing sight of the goal.

My role

As a User Experience Designer on this incubator project, I worked with my team to uncover the persona & the pain points using design thinking. Later, my role evolved into an advocate for the user and I drove the design for the developer experience.

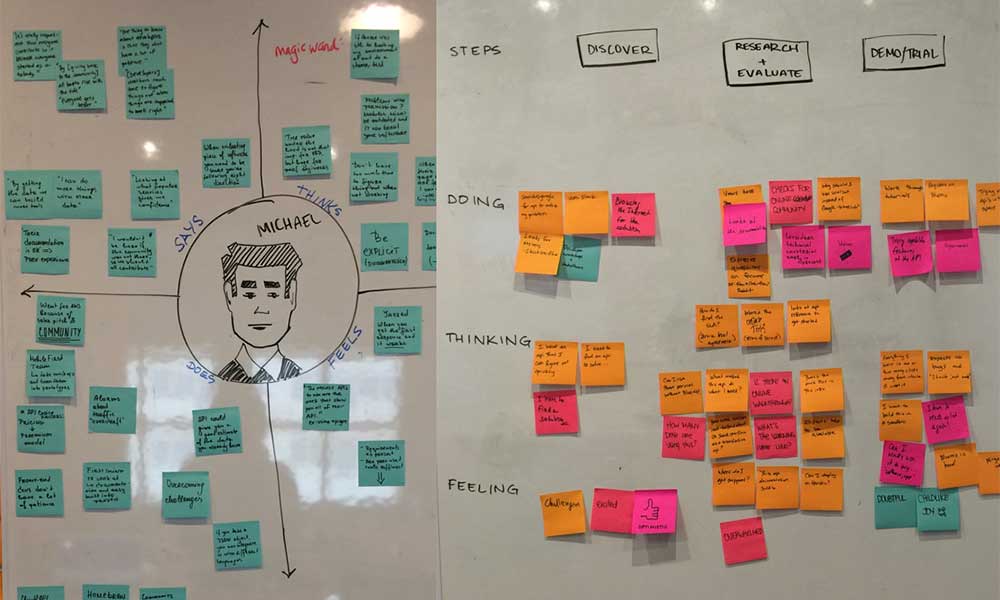

Research

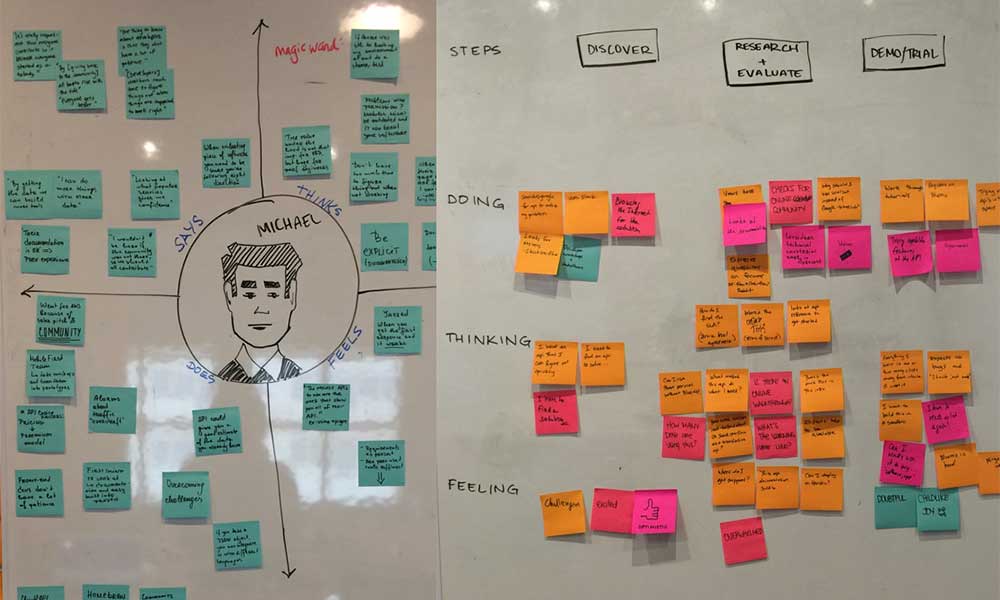

Nobody on our team had ever designed an experience for developers, so we all needed a common understanding of their world. We conducted a series of interviews with app developers to understand their motivations when exploring APIs and their day-to-day work life. During the interviews, we also probed the difficulties they had with the Watson Developer Cloud to understand how it could be improved. The outcome of this exercise was an Empathy Map, an As-is Scenario and a Persona.

"How painful is this API?"

- A developer at IBM

"Is it worth going through that pain because there might be some potential useful thing about it?"

- A front-end engineer at IBM

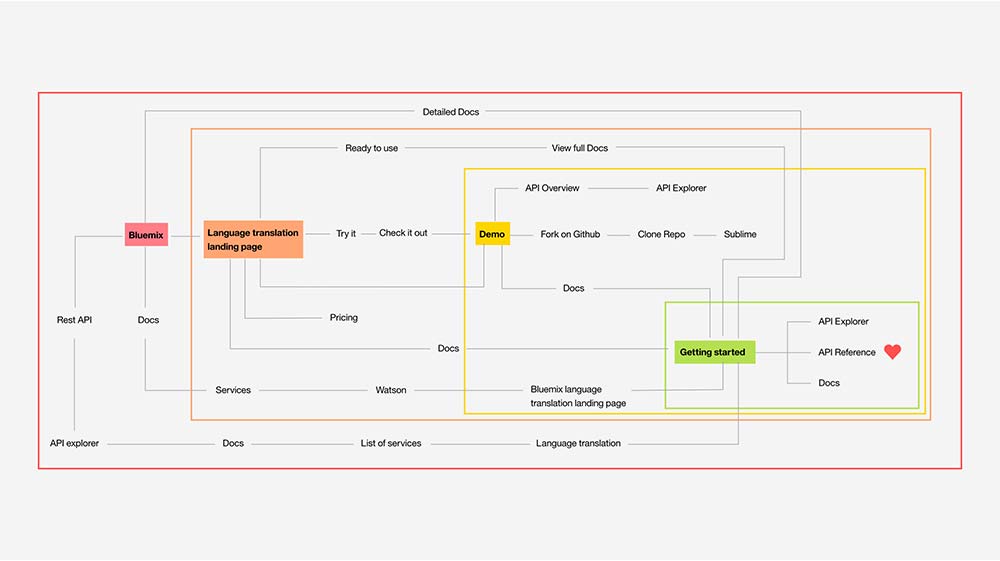

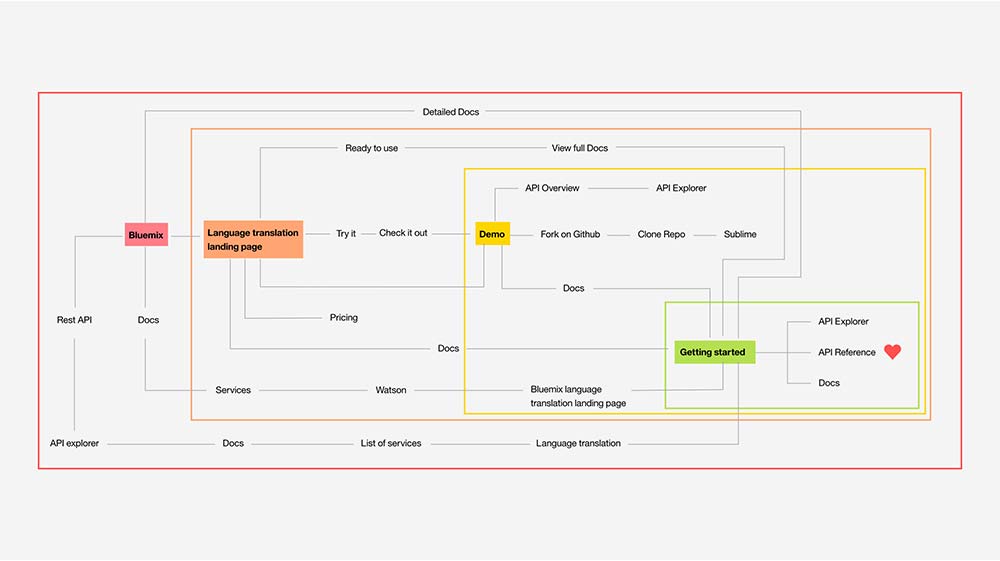

We also reviewed how difficult it was to learn about the Watson Language API and found significant issues with the navigation. Links with the same name led to different places, which was simply confusing.

Research Findings

After our research, we came to three conclusions:

- A good API lets developers try out the code quickly.

- Accessible and understandable documentation is necessary.

- Navigation on the Watson Developer Cloud needed to be fixed.

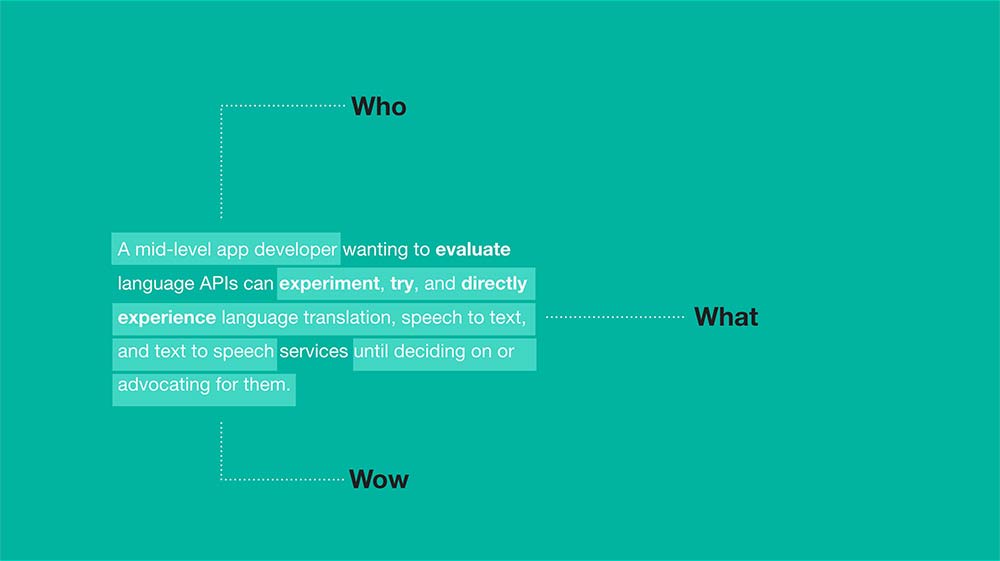

Updated Hill

As we spoke with stakeholders and conducted more interviews, our hill soon turned into a mountain full of cliffs. As we redefined our understanding of Watson, it became apparent that IBM Cloud (formerly IBM Bluemix) was a necessary component of our “Try and Buy” objective. Unfortunately, covering it wasn't an option given the duration of the project. In agreement with our stakeholders, we dropped Bluemix and redefined our hill, changing Try+Buy to Try.

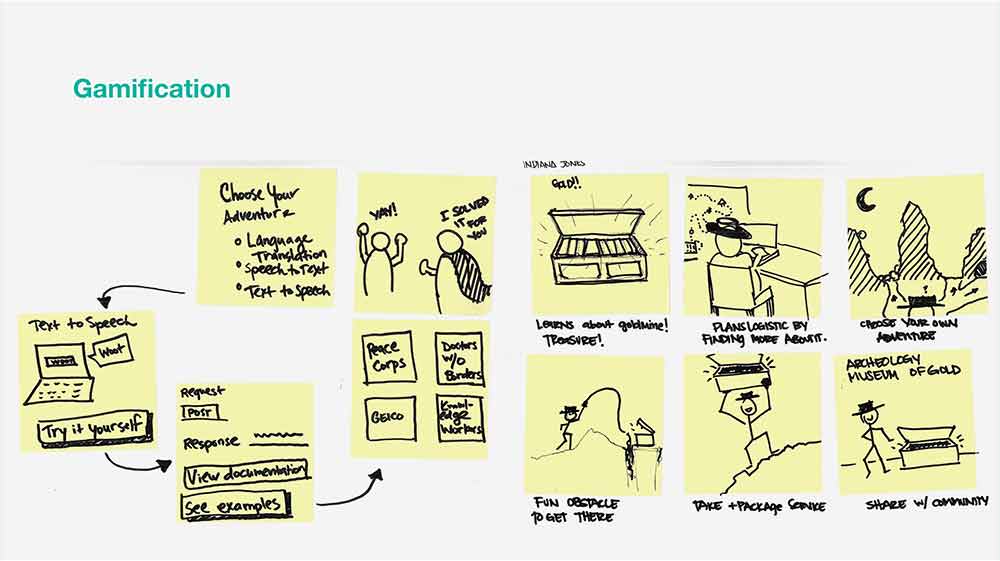

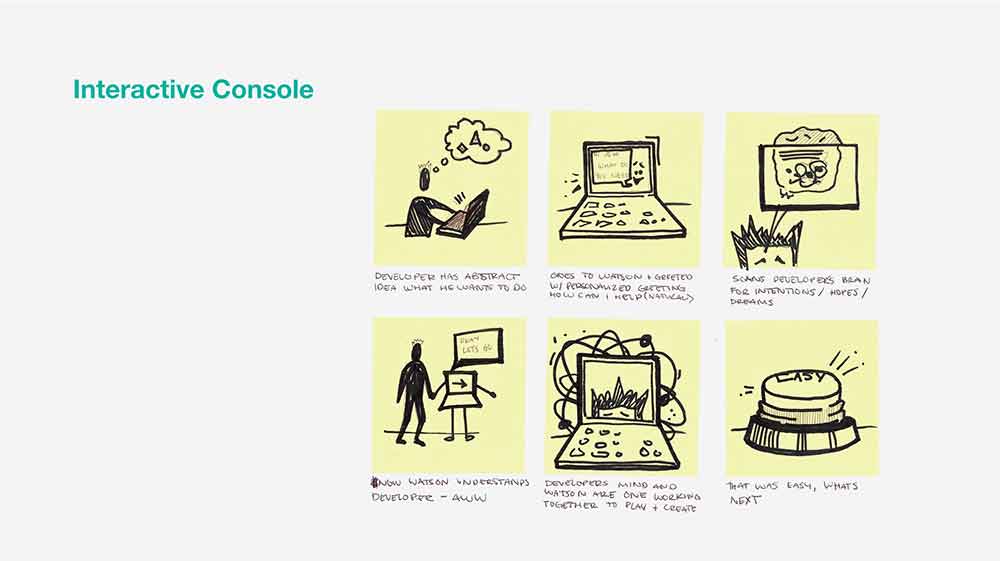

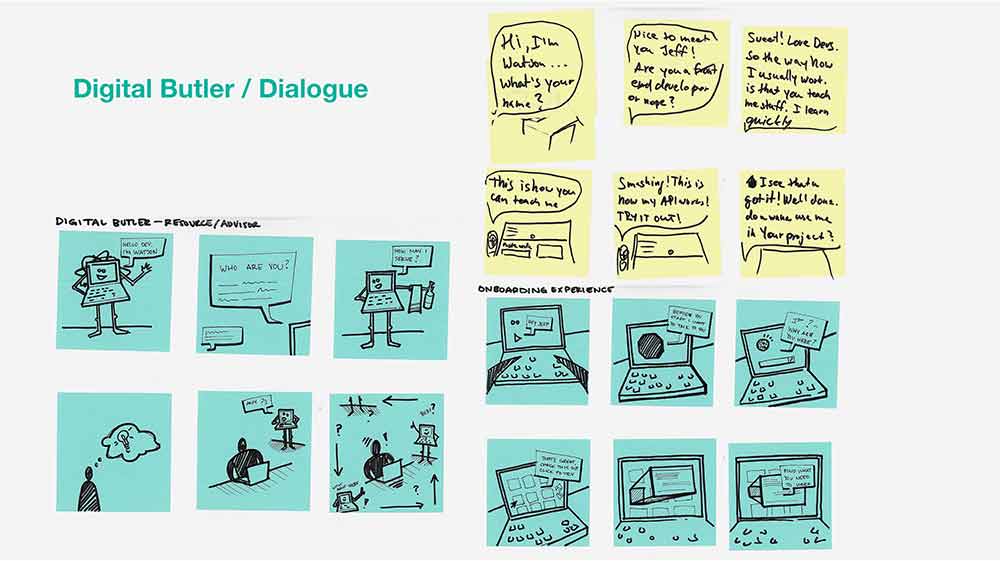

Storyboards

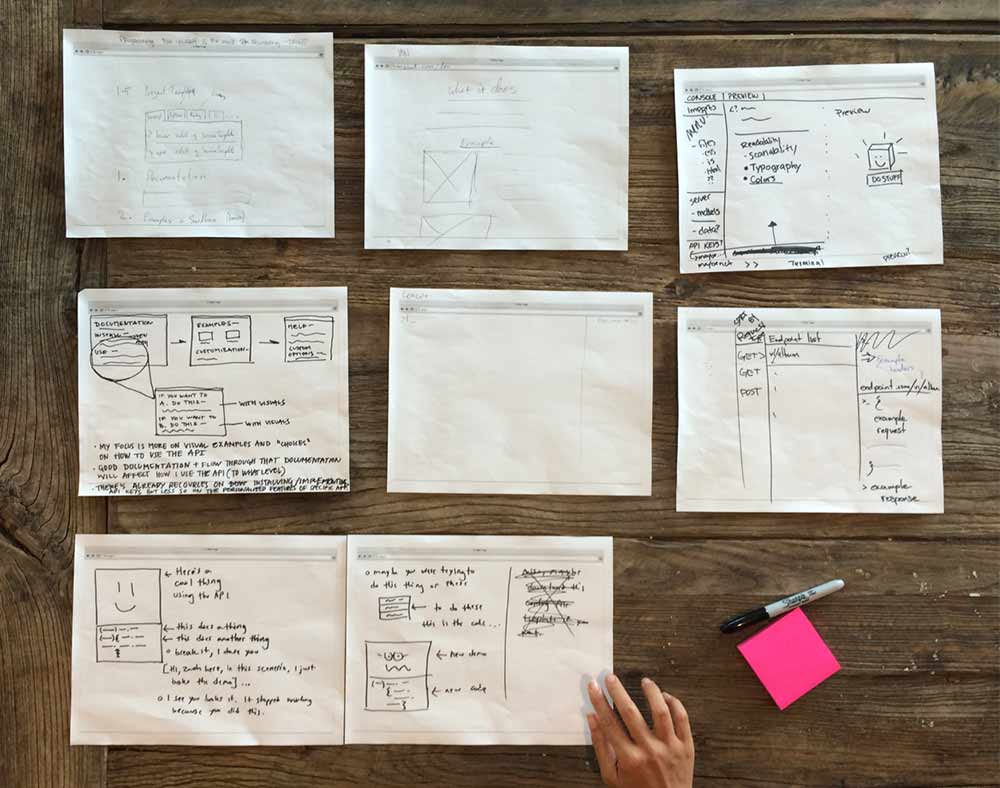

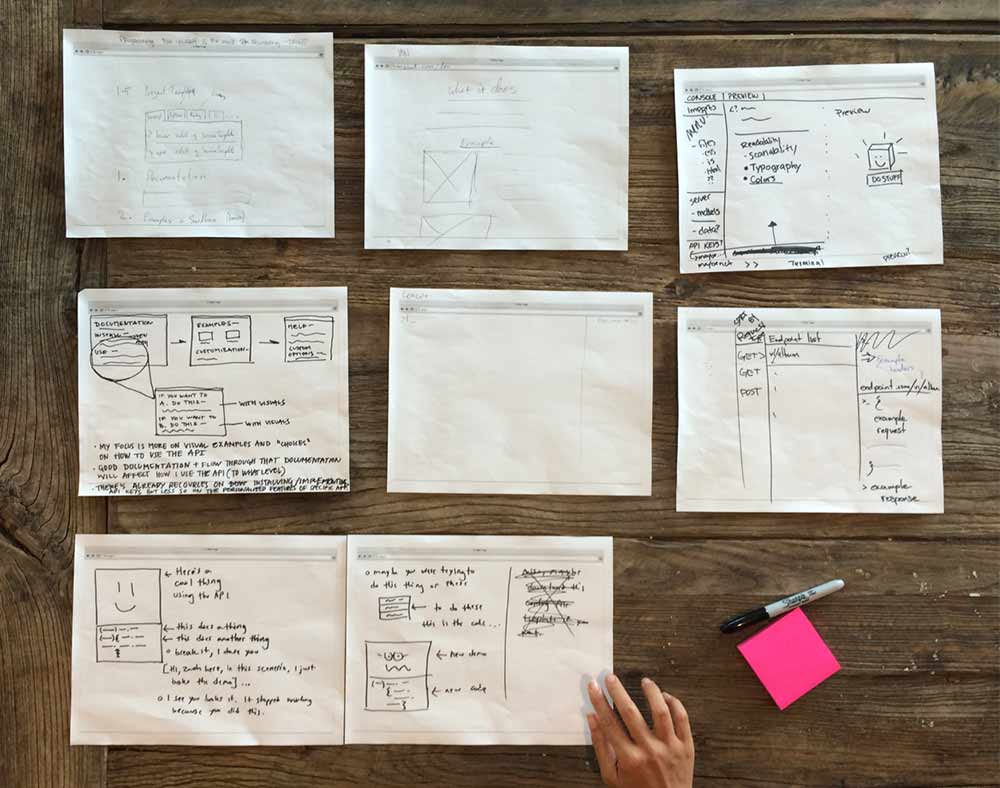

Before diving into wireframes, we explored three ideas in storyboards: gamification, an interactive console, and an assistant.

Wireframes

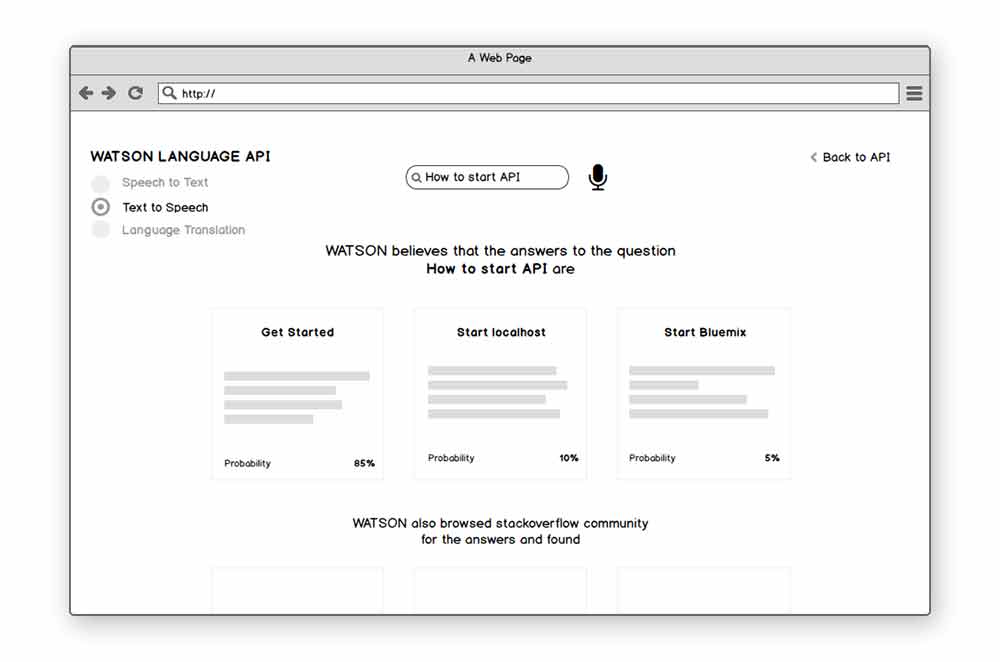

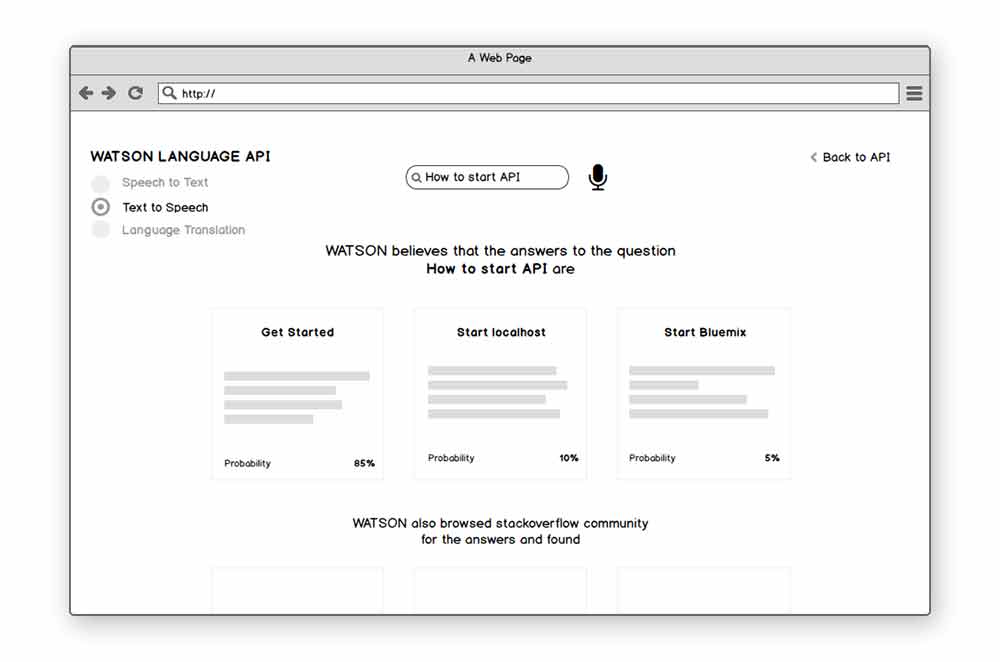

Our initial hypothesis was that we could teach developers how to use the Watson Language Services by actually using Watson AI. We wanted to showcase what is possible with it and to show clearly how Watson sets itself apart from competing services.

After giving our stakeholders a walkthrough of the initial wireframes, we learned that embedding Watson in the website would be a very heavy investment and might not be the right thing to do. They called it science fiction. It would have to be trained for all sorts of questions, and our main user might not be interested in that kind of interaction. That is why we had to pivot, go back to pen and paper, and sketch out a number of variations focused on the API docs and console.

Ultimately, we had our so-called aha moment when we realized we might need to strip out all the fancy AI interactions and just let devs explore the code. That meant one more pivot, but this one was a step in the right direction.

My design decisions

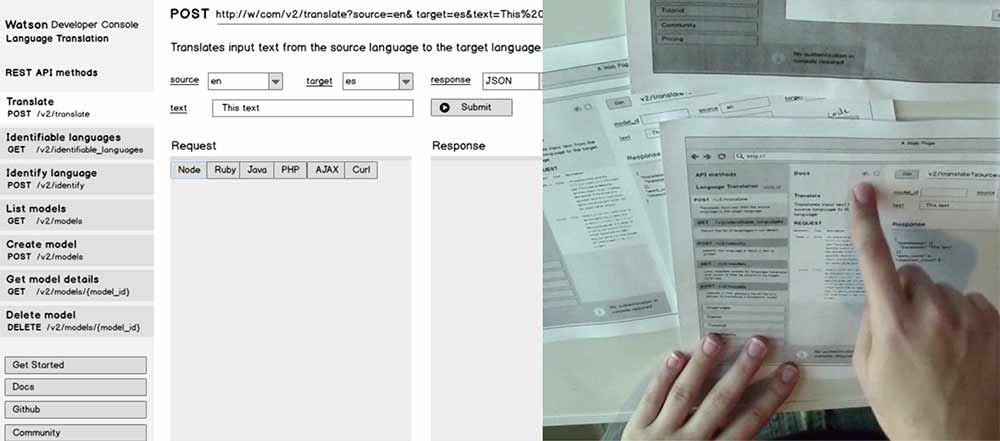

In the 5th week of the project, I delivered a clickable lo-fi prototype, which helped us move forward. See my design decisions below, as well as the prototype.

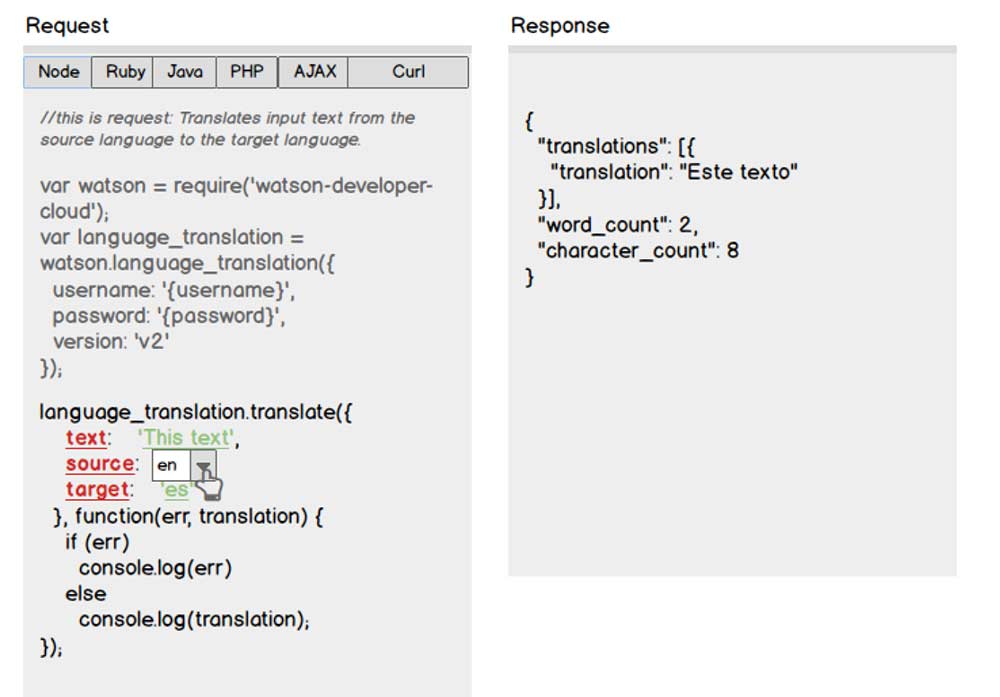

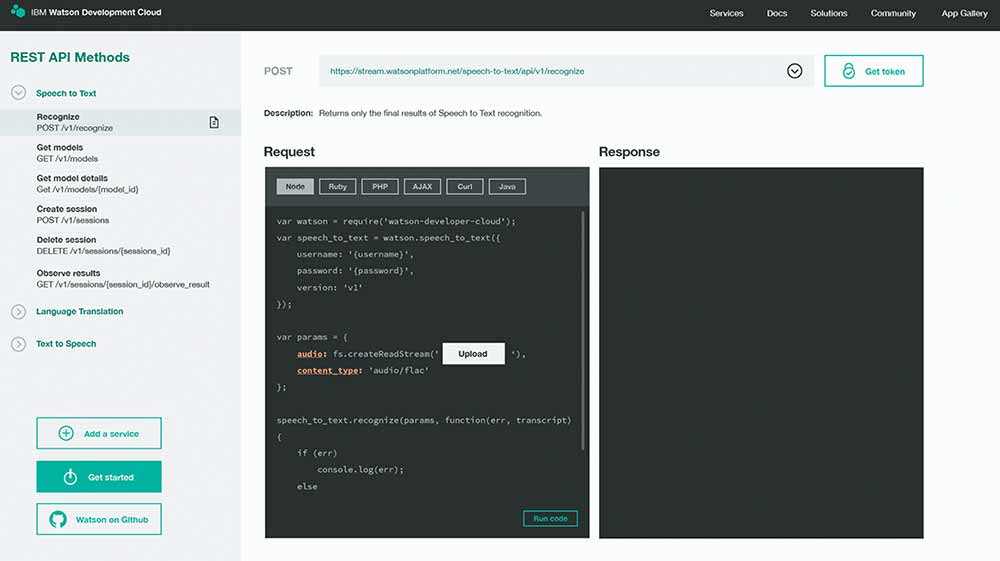

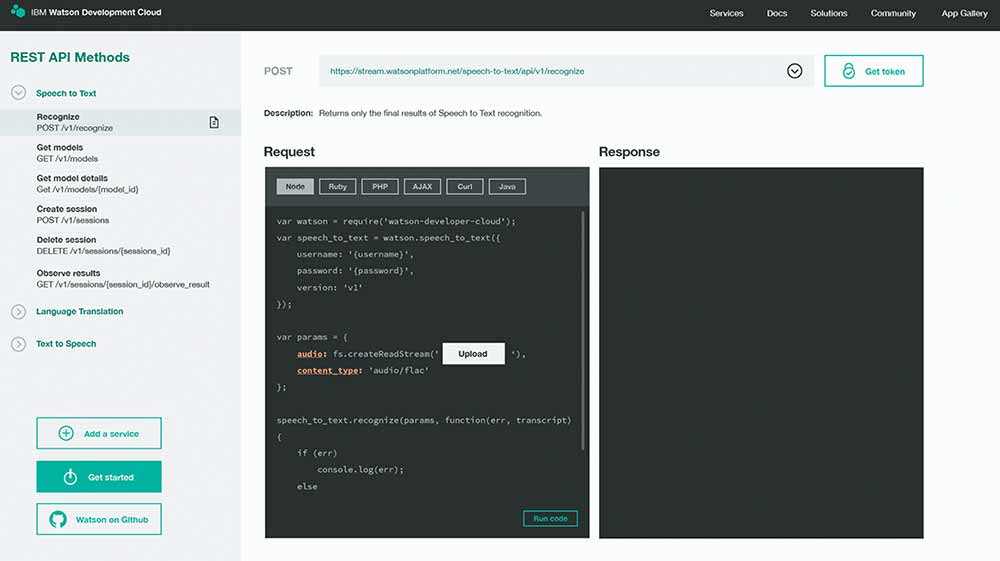

No bullshit -> Interactive Console

As mentioned above, user testing uncovered that developers needed to jump into code as soon as possible. While voice or chatbot interactions would most likely excite non-technical people, developers would be discouraged by them. That is an important point because we were building for developers. Once I fully understood it, I had to advocate for the new direction within our team, which turned out to be a challenge.

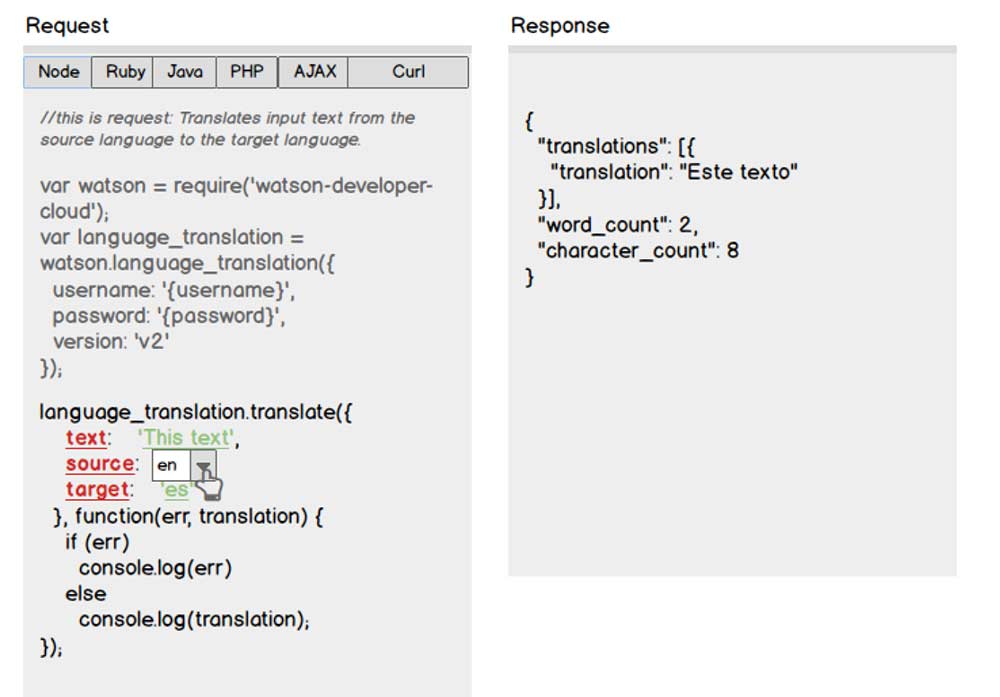

To convince my team, I designed a wireframe that combined a console with documentation. A developer can browse it like ordinary documentation, but they can also try out the API calls directly, without opening their own console, registering for IBM Cloud, and getting a token. That streamlined the whole process. Developers could now see the responses they got after playing with requests in multiple languages.

You might ask: "But consoles usually involve a lot of coding, right?" Well, not in this case. The console would pre-populate the request, so the developer only needs to jump in and change a few variables. For example, an audio file could be uploaded for translation, or a dropdown would let them select the source language.
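The pre-populated request idea can be sketched in a few lines of Python. This is only an illustration of the concept, not the prototype's implementation: the `RequestTemplate` class, the `api.example.com` URL, and the `source`, `target`, and `text` fields are all hypothetical and do not come from the Watson APIs.

```python
from dataclasses import dataclass, field, replace
from urllib.parse import urlencode


# Hypothetical illustration: these names are NOT part of any Watson API.
@dataclass(frozen=True)
class RequestTemplate:
    method: str
    url: str
    params: dict = field(default_factory=dict)

    def with_params(self, **overrides):
        """Return a copy with some pre-filled fields changed by the developer."""
        unknown = set(overrides) - set(self.params)
        if unknown:
            # Only fields the console already exposes can be edited.
            raise KeyError(f"not an editable field: {sorted(unknown)}")
        return replace(self, params={**self.params, **overrides})

    def render(self):
        """Show the request as a single line, the way a console might display it."""
        return f"{self.method} {self.url}?{urlencode(self.params)}"


# The console ships with a ready-to-run request (placeholder URL and values).
DEFAULT_TRANSLATE = RequestTemplate(
    method="POST",
    url="https://api.example.com/translate",
    params={"source": "en", "target": "es", "text": "Hello"},
)

# The developer only picks a different source language from the dropdown.
edited = DEFAULT_TRANSLATE.with_params(source="fr")
print(edited.render())
```

The point of the sketch is that the developer never writes the request from scratch: they start from a working default and change one value at a time.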

User Feedback

- “I want it now!”

- “Wow! Amazing”

- “I’m clapping guys, this would save my time so much. I could literally try this in 5 minutes instead of struggling for 2 weeks.”

Clear Navigation

From the research, we knew that navigation was one of the key pain points. So when I worked on the latest iteration, I took care to keep the naming consistent and the links visible at all times, so users can get anywhere they need to.

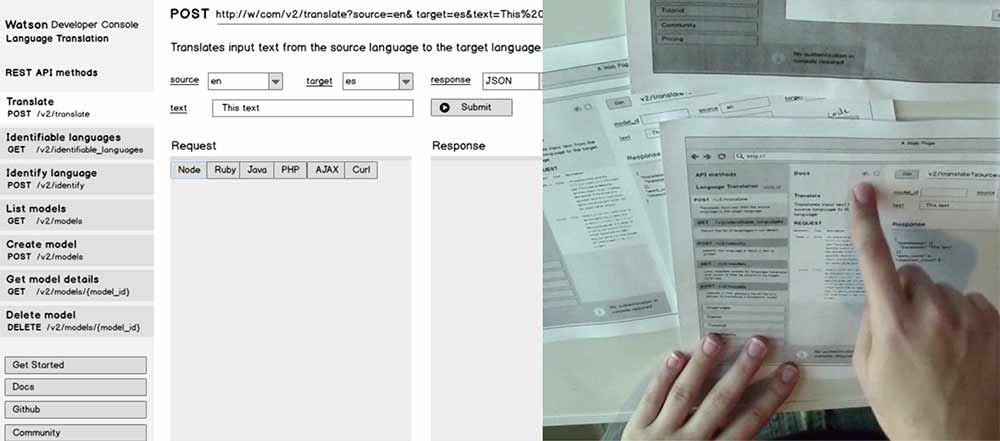

Lo-Fi Demo

Below is the low-fidelity demo we used to convince the team and get feedback from our users.

Check out the lo-fi prototype here.

Hi-Fi Demo

In the last sprint, I collaborated with a visual designer to move the designs from wireframes to actual mockups. The result of that effort was a clickable prototype. Below is an InVision prototype if you want to play around, but please note that the sound translations in the experience are missing.

Check out the hi-fi prototype here.

Reflection

At the end of the 6-week project, we delivered our Playback 0 to our sponsor users, stakeholders, the General Manager of IBM Design, other important people from IBM Design, and 65 IBM Bootcampers. The Watson team took over our work as one of the concepts for the future of their environment and carried on evaluating how it could be implemented. As far as I remember, they liked the idea of an interactive console, but they found that the actual need was a better terminal experience. Nevertheless, it was a useful exploration for their team, and they learned more about tackling this problem differently.

Looking back, we worked great together as a team. We all helped our design researcher with the research, then we all contributed to mapping the current as-is scenario and the future to-be scenarios. The next steps were storyboarding, wireframing and prototyping. That is where I helped my team the most and drove most of the design decisions.

Reflecting on the experience, I learned a lot about how IBM does Design Thinking, what it is like to design for a Developer Persona, and how IBM Watson works. That created a base for my work at IBM Design.
